Llama 3.1 Chat Template

Much like tokenization, different models expect very different input formats for chat. This is the reason we added chat templates as a feature. Chat templates are part of the tokenizer for text models. Changes to the prompt format, such as EOS tokens and the chat template, have been incorporated into the tokenizer configuration that is provided alongside the HF model. Base models, however, do not have a chat template on Hugging Face.

That leads to a common question: "I want to instruct fine-tune the base pretrained Llama 3.1 model. Can I use a chat template of my choice to fine-tune it?" One thing to watch is the EOS token. It is supposed to be at the end of every turn, yet it is defined as <|end_of_text|> in the config and as <|eot_id|> in the chat_template.
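The EOS mismatch is easier to see with a concrete layout. The following minimal stdlib Python sketch renders messages in a simplified Llama 3.1 style. Each message is wrapped in <|start_header_id|>role<|end_header_id|> and closed with <|eot_id|>. It is not the official template, which also injects date lines into the system message and handles tools. The helper name format_llama31 is hypothetical, and the token strings are the ones quoted above plus <|begin_of_text|>.

```python
from typing import Dict, List

BOS = "<|begin_of_text|>"
EOT = "<|eot_id|>"                  # end-of-turn marker used in the chat_template
EOS_IN_CONFIG = "<|end_of_text|>"   # eos_token as defined in the base model's config


def format_llama31(messages: List[Dict[str, str]], add_generation_prompt: bool = False) -> str:
    """Render messages in a simplified Llama 3.1 layout (hypothetical helper)."""
    parts = [BOS]
    for msg in messages:
        parts.append(f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n")
        # Strip surrounding whitespace from each message's content.
        parts.append(msg["content"].strip())
        parts.append(EOT)  # every turn, including the assistant's, ends here
    if add_generation_prompt:
        # Open an assistant header so the model continues with its reply.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    chat = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hi!"},
        {"role": "assistant", "content": "Hello! How can I help?"},
    ]
    text = format_llama31(chat)
    print(text)
    # The training text ends with <|eot_id|>; the config's <|end_of_text|> never appears.
    print(text.endswith(EOT), EOS_IN_CONFIG in text)
```

Training examples built this way teach the model to end its reply with <|eot_id|>. A common precaution is therefore to make sure generation also stops on that token, not only on the config's <|end_of_text|>.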
In llama.cpp, llama_chat_apply_template() was added in #5538. It allows developers to format a chat into a text prompt. By default, it uses the template stored in the model's metadata, tokenizer.chat_template.

There is also a JSON tool-calling chat template for Llama 3.1. It instructs the model that, when it receives a tool call response, it should use the output to format its answer.
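Llama 3.1 supports JSON tool calling: the model emits a tool call, the tool runs, and its output is fed back so the model can format an answer. The following rough stdlib Python sketch walks through that loop. It assumes three things:

- The model emits a tool call as a JSON object with "name" and "parameters" keys.
- The tool's output is fed back as a turn with the ipython role, which is how we understand Meta's Llama 3.1 prompt-format docs.
- The layout is simplified and is not the official template.

The get_weather tool, the TOOLS registry, and the helper names are all hypothetical.

```python
import json
from typing import Callable, Dict, List

EOT = "<|eot_id|>"


def format_llama31(messages: List[Dict[str, str]], add_generation_prompt: bool = False) -> str:
    """Simplified Llama 3.1 layout (hypothetical helper, not the official template)."""
    out = "<|begin_of_text|>"
    for msg in messages:
        out += f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n{msg['content'].strip()}{EOT}"
    if add_generation_prompt:
        out += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return out


def get_weather(city: str) -> Dict[str, str]:
    # Hypothetical tool; a real one would call an external service.
    return {"city": city, "forecast": "sunny"}


# Hypothetical registry mapping tool names the model may emit to Python callables.
TOOLS: Dict[str, Callable[..., Dict[str, str]]] = {"get_weather": get_weather}


def run_tool_call(model_output: str) -> str:
    """Parse a JSON tool call like {"name": ..., "parameters": {...}} and run it."""
    call = json.loads(model_output)
    func = TOOLS[call["name"]]  # raises KeyError if the model names an unknown tool
    result = func(**call.get("parameters", {}))
    return json.dumps(result)


if __name__ == "__main__":
    messages = [{"role": "user", "content": "What's the weather in Paris?"}]
    # Pretend the model replied with this tool call.
    tool_call = '{"name": "get_weather", "parameters": {"city": "Paris"}}'
    messages.append({"role": "assistant", "content": tool_call})
    # Feed the tool output back under the ipython role, then open a new assistant turn.
    messages.append({"role": "ipython", "content": run_tool_call(tool_call)})
    print(format_llama31(messages, add_generation_prompt=True))
```

The model would then read the ipython turn and write its final answer in the new assistant turn. Real templates cover more cases, such as system instructions that list the available tools and built-in tools; this sketch leaves them out.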
The default prompts can also be customized, for example so that the model always answers, even if the context is not helpful.

GitHub kuvaus/llamachat Simple chat program for LLaMa models

blackhole33/llamachat_template_10000sample at main

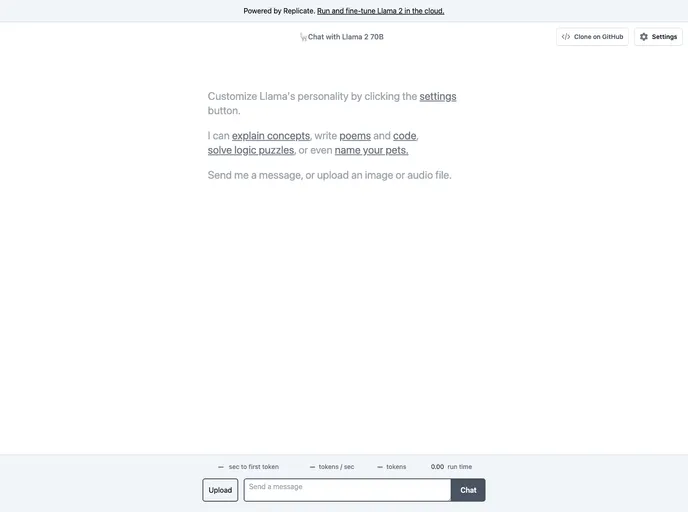

Llama38bInstruct Chatbot a Hugging Face Space by Kukedlc

antareepdey/Medical_chat_Llamachattemplate · Datasets at Hugging Face

Creating Virtual Assistance using with Llama2 7B Chat Model by

wangrice/ft_llama_chat_template · Hugging Face

Llama Chat Network Unity Asset Store

How to write a chat template for llama.cpp server? · Issue 5822

Llama Chat Tailwind Resources

LLaMAV2Chat70BGGML wrong prompt format in chat mode · Issue 3295
